A Conversational Future. Making technology adapt to us.

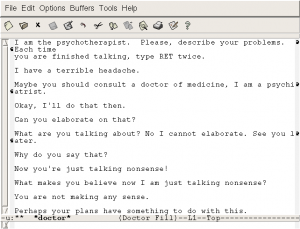

The first ‘conversations’ between humans and technology started in the mid-1960s with ELIZA, a simple computer therapist chat application. It was an experiment in making natural language available to a computer, and it worked mainly by answering a simple set of questions with questions: ‘How are you?’, ‘That sounds great!’, ‘What are you doing later?’.

While ELIZA was capable of engaging in discourse, it could not converse with true understanding.
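To make the "answer with a question" idea concrete, here is a minimal sketch of an ELIZA-style responder. It is an illustration only: the rules, the `respond` and `reflect` names and the fallback line are our own assumptions, not the original ELIZA script. It matches a phrase, swaps first-person words for second-person ones, and hands the phrase back as a question, with no understanding involved.

```python
import re

# Illustrative rules only (assumed for this sketch, not the original ELIZA script).
# Each rule pairs a pattern with a question template that echoes the captured phrase.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Why does your {0} come to mind?"),
]

# Swap first-person words for second-person ones so the echo reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

# Used when no rule matches; the program has nothing else to fall back on.
FALLBACK = "Can you tell me more about that?"


def reflect(fragment: str) -> str:
    """Lower-case the fragment and replace first-person words."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text: str) -> str:
    """Return a question built from the first rule that matches the input."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I feel tired of my job."))
    print(respond("Hello"))
```

Anything outside the handful of patterns falls straight through to the fallback line, which is exactly the limitation described above: the program can keep a conversation going without grasping any of it.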

It’s always been a conversation, though; interfaces are by definition a communication or conversation between humans and technology. A keyboard turns input into instructions for a word processing application; a mouse or stylus provides a constant conversation between a user’s movements and an intended instruction to software.

In order to understand how to evolve human-machine interactions, it’s important to understand how human-human conversations work IRL.

We attended a session this year at #SXSW where Google's Laura Granka explained the challenges they faced in natural language Google search: ‘How do you ask for what you want?’

According to Granka, conversations generally have three phases that loop over and over again. If you were to meet a stranger and start a conversation, it would go something like this:

- First a little back and forth to find context

- After context, meaning arises

- The conversation adapts and improves

This loops over and over, adapting, branching and improving the conversation.

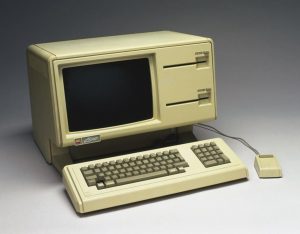

In the early years of computing, users had to ‘know how to ask’ a machine via the command line. This was specialist knowledge that limited the conversation, and access, to a handful of individuals.

Next came the GUI, allowing visual representations of actions to be easily executed. Dragging a file into the trash can deleted the file, and textual menus allowed deeper navigation and access to other areas and functions.

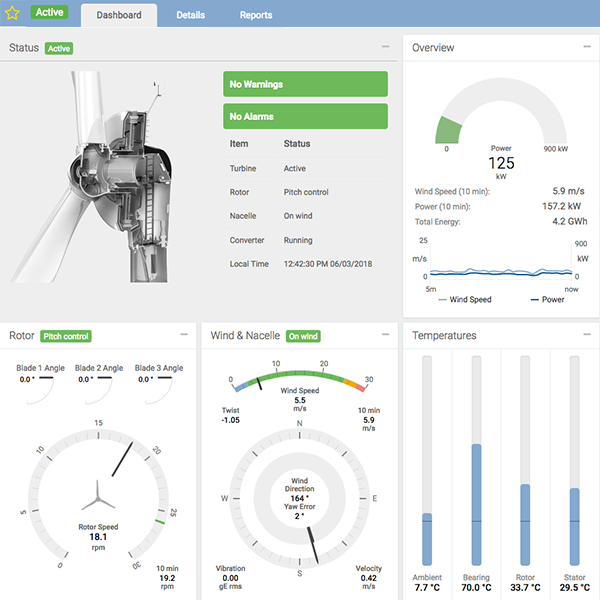

Move on to 2018 and these conversations and interactions between humans and technology are becoming more ambient. By adding data (context) and input (sensory), our conversations now have deeper context.

Furthermore, the landscape is changing as smart devices (inputs) add meaning and context to conversations using location, touch, voice, motion, facial tracking and even mood in some cases.

The convergence of these technologies gives quicker answers to the questions users are trying to ask and, as a result, we are slowly enabling machines to adapt to us.

Hector Ouilhet, Google's head of design for Search and Assistant, gave us the example of trying to draw a dinosaur for his daughter on a tablet. While he was drawing, an AI engine was scanning through thousands of images in the background to understand 'what he was drawing' and how it could help 'improve the conversation'. Eventually, it presented Hector with a much better drawing of the same dinosaur, re-drawn in his own hand, so he could continue the conversation with his daughter with a sketch that better illustrated his point.

The example above may sound a little 'big brother', with AI always listening in on conversations through microphones, recording tactile interactions using trackpads and touch-screens, and constantly pulling in context from search and social channels. But these 'silent technologies' already provide better experiences in most human-computer interactions. Just think of how Google Search and Maps serve content relevant to your location and search history, or how Facebook serves content relevant to your behaviour, opinions and demographic. You've probably already given your consent to Google, Apple and Facebook to mine your data and serve you content.

Hector went on to explain some of the UI choices they made on the Google homepage over the years. Initially, much like the first exchanges of a conversation, they added 'topics' to the top of the homepage to organise search into categories and help users 'ask for what they wanted' more easily. It became apparent over time that in a natural conversation or search you wouldn't limit yourself to predefined topics like video, sport, blogging or images; you would 'just ask'. They realised that by taking context and history into account they could remove topics and channels completely and let the machine do the heavy lifting instead of the human.

At the heart of all of this lies the key takeout: 'user journeys guide experience'. Yup, that's H-E-A-R-T: Happiness, Engagement, Adoption, Retention & Task success.

As technology improves at tracking and reading our needs (based on how we journey through the cyber realm), the human-computer conversation deepens.

- Technology advances based on feedback from people's experience of their user journeys.

- User-centred design requires the technology to adapt to the user, not the other way around.

- User interfaces increasingly need the flexibility to adapt to individuals and to evolve and change in real time.